1. Why Your Current Workflow is Inefficient

If you're a developer, researcher, or data scientist, you know that raw subtitle data from YouTube is rarely usable as-is. It’s a swamp of ASR errors, messy formatting, and broken timestamps. This guide is for those who need advanced YouTube subtitle data preparation: the tools and methods to convert noise into clean, structured data ready for LLMs, databases, and large-scale analysis. We cover everything from bulk processing to bypassing the YouTube API.

You cannot manually clean thousands of files, and the YouTube Data API's quota limits make large pulls impractical.

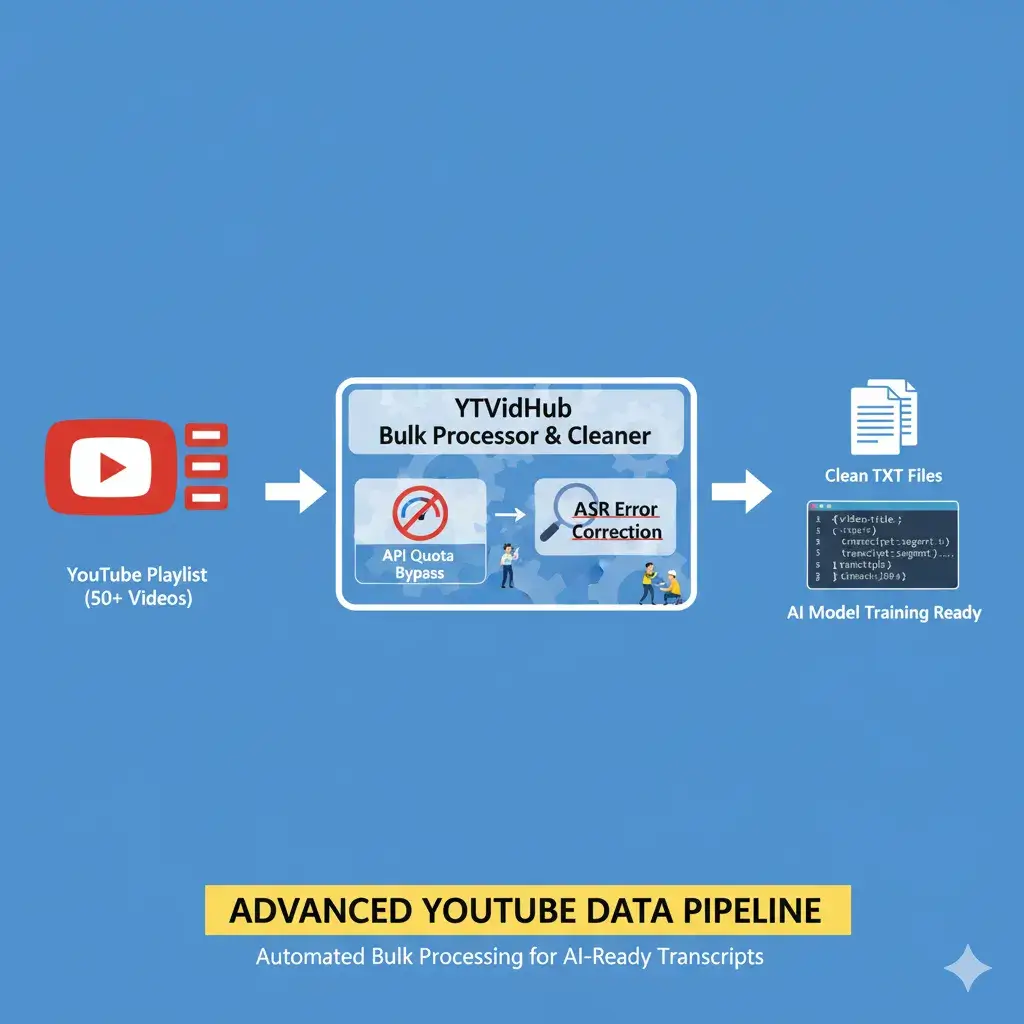

The Essential Workflow: Bulk Processing and Cleaning

If you need data from 50+ videos, you need batch processing. Downloading a YouTube playlist's subtitles one video at a time is a non-starter. Our toolkit is built to remove this bottleneck.

The Case for a Truly Clean Transcript

A YouTube transcript for a video without human-authored subtitles is usually just raw ASR output riddled with errors. Our method aims for final output that is 99% clean, standardized text, suitable for training AI models.
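To make "clean, standardized text" concrete, here is a minimal sketch of text-level normalization. It is an illustration, not our toolkit's actual pipeline; the function names and the choice of what counts as noise (square-bracket tags such as `[Music]`, `>>` speaker markers, repeated whitespace) are assumptions for this example.

```python
import re
from typing import List

# Square-bracket non-speech tags such as [Music] or [Applause].
NOISE_TAG = re.compile(r"\[[^\]]*\]")
# ">>" markers that some captions use to signal a speaker change.
SPEAKER_MARK = re.compile(r">>+\s*")
WHITESPACE = re.compile(r"\s+")


def clean_line(raw: str) -> str:
    """Return one caption line with noise tags and stray whitespace removed."""
    text = NOISE_TAG.sub(" ", raw)
    text = SPEAKER_MARK.sub("", text)
    return WHITESPACE.sub(" ", text).strip()


def clean_transcript(lines: List[str]) -> str:
    """Join cleaned lines into one paragraph, skipping lines that end up empty."""
    cleaned = (clean_line(line) for line in lines)
    return " ".join(part for part in cleaned if part)
```

Real ASR cleanup usually needs more than this (punctuation restoration, casing, term correction), but even these three rules remove a large share of the visible noise.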

2. The Power of Batch: Downloading Subtitles from an Entire Playlist

This is the only way to scale your project.

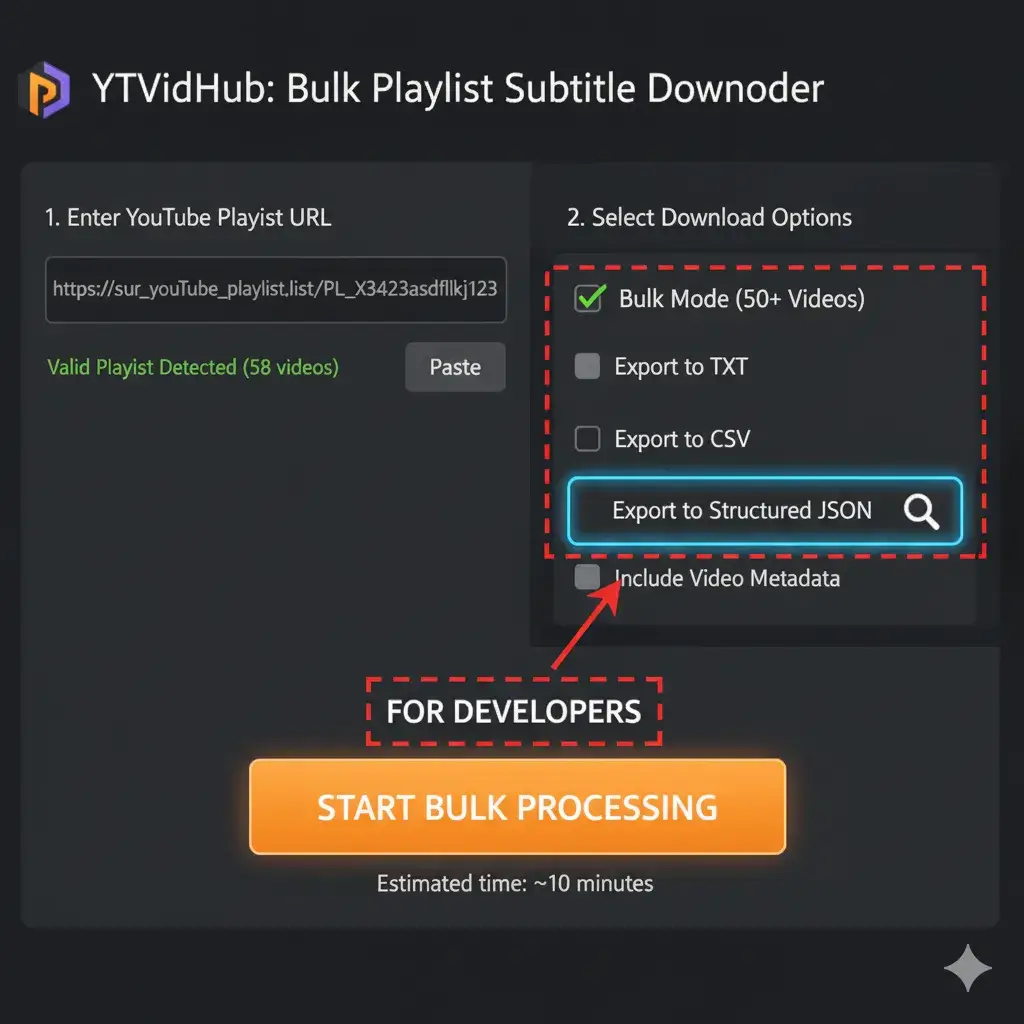

Step 1: Paste the Playlist URL and Select Bulk Mode

Simply input the playlist URL. Our tool queues every video in the list automatically.
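If you were scripting the same step yourself, queuing starts with pulling the playlist ID out of the URL. The sketch below assumes the ID lives in the `list` query parameter, as it does in standard YouTube playlist and watch URLs; `playlist_id` and `build_queue` are hypothetical helper names, and fetching the actual video IDs for a playlist is out of scope here.

```python
from typing import Dict, List, Optional
from urllib.parse import parse_qs, urlparse


def playlist_id(url: str) -> Optional[str]:
    """Return the value of the `list` query parameter, or None if it is absent."""
    values = parse_qs(urlparse(url).query).get("list")
    return values[0] if values else None


def build_queue(video_ids: List[str]) -> List[Dict[str, str]]:
    """Create one pending job per unique video ID, preserving playlist order."""
    unique_ids = list(dict.fromkeys(video_ids))
    return [{"video_id": vid, "status": "pending"} for vid in unique_ids]
```

Deduplicating before queuing matters because the same video can appear more than once in a playlist.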

Step 2: Choose Your Structured Output (Clean TXT or JSON)

Developers demand structured data. We offer JSON export with segment IDs and clean text fields, acting as a free YouTube API alternative.
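As an illustration of what "segment IDs and clean text fields" can look like, the sketch below builds a JSON document from timed cues. The field names (`video_id`, `segments`, `id`, `start`, `end`, `text`) are assumptions for this example and not a specification of our export schema.

```python
import json
from typing import Dict, Iterable, List, Tuple

Cue = Tuple[float, float, str]  # (start_seconds, end_seconds, text)


def to_segments(cues: Iterable[Cue]) -> List[Dict]:
    """Assign a sequential ID to each cue and trim its text."""
    return [
        {"id": i, "start": round(start, 3), "end": round(end, 3), "text": text.strip()}
        for i, (start, end, text) in enumerate(cues)
    ]


def export_json(video_id: str, cues: Iterable[Cue]) -> str:
    """Serialize one video's segments as a UTF-8 friendly JSON string."""
    doc = {"video_id": video_id, "segments": to_segments(cues)}
    return json.dumps(doc, ensure_ascii=False, indent=2)
```

Stable segment IDs let you reference a passage from a database row or an LLM prompt without carrying timestamps around.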

The Bulk Workflow at a Glance:

- **Activate Bulk Mode:** Use the toggle or button to switch from single-URL to playlist/channel processing.

- **Select Output Format:** In the format dropdown, select 'JSON (Structured)' instead of the default 'TXT'. The tooltip explains the benefit for developers.

- **Initiate Download:** When the "Download .ZIP" button becomes active, the files have been packaged and are ready to download.

3. Bypassing the YouTube API with Structured JSON

Why pay hundreds of dollars in API quota when you only need the text?

The yt-dlp Alternative: When Code Is Necessary

For terminal power users, tools like youtube-dl and yt-dlp are excellent for fetching subtitles, but their output still requires cleaning scripts. Our tool automates the cleaning before the download, saving you days of custom scripting. That makes our output more directly usable than the raw subtitle files these tools produce on their own.
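For a sense of what such a cleaning script involves, here is a minimal sketch that turns an auto-generated WebVTT file into plain text lines. It assumes the layout YouTube auto-captions typically use (a header block, timing lines, inline `<c>` and word-timing tags, and lines repeated as the caption scrolls). It does not handle cue identifiers or `NOTE` blocks, and `vtt_to_lines` is a hypothetical name.

```python
import re
from typing import List

# Fetching the file is outside this sketch. With yt-dlp it might look like:
#   yt-dlp --skip-download --write-auto-subs --sub-langs en --sub-format vtt <URL>

# Cue timing line, e.g. "00:00:02.000 --> 00:00:04.000 align:start".
TIMING = re.compile(r"^(?:\d{2}:)?\d{2}:\d{2}\.\d{3} --> ")
# Inline markup such as <c>, </c>, or <00:00:01.200> word timings.
INLINE_TAG = re.compile(r"<[^>]+>")


def vtt_to_lines(vtt: str) -> List[str]:
    """Return caption text lines with timings, tags, and consecutive repeats removed."""
    lines: List[str] = []
    in_header = True  # everything before the first blank line is file header
    for raw in vtt.splitlines():
        raw = raw.strip()
        if not raw:
            in_header = False
            continue
        if in_header or TIMING.match(raw):
            continue
        text = INLINE_TAG.sub("", raw).strip()
        # Auto captions often repeat the previous line as the display scrolls.
        if text and (not lines or lines[-1] != text):
            lines.append(text)
    return lines
```

This covers only the structural noise. ASR misrecognitions and missing punctuation still need separate handling, which is where most of the custom scripting time goes.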

Real-World Impact: Cost & Time Savings

- Cost Reduction (Project A): A 5,000-video data pull via the official YouTube API was estimated to cost over $500 in quota fees. The same operation with our toolkit used a flat credit package, resulting in over 80% cost savings.

- Time Savings (Project B): A researcher spent 3 hours processing a 100-video playlist using `yt-dlp` and a custom Python cleaning script. Our one-click bulk tool delivered a cleaner, ready-to-use dataset in under 5 minutes.

- Labor Efficiency (Project C): An NLP team reported an average of 8 hours of manual post-cleaning for every 1,000 raw transcripts. Our pre-cleaned output reduced this to just 30 minutes of final validation.
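For a sense of scale behind the first bullet, the arithmetic of quota-limited throughput can be sketched in a few lines. Both constants are assumptions based on the publicly documented defaults for the YouTube Data API v3 (10,000 units per day per project, 200 units per `captions.download` call), not figures from Project A. Verify them against the current quota documentation. The sketch measures elapsed days, not dollars.

```python
import math

DAILY_QUOTA_UNITS = 10_000         # assumed default daily quota per project
UNITS_PER_CAPTION_DOWNLOAD = 200   # assumed cost of one captions.download call


def days_needed(
    videos: int,
    daily_quota: int = DAILY_QUOTA_UNITS,
    unit_cost: int = UNITS_PER_CAPTION_DOWNLOAD,
) -> int:
    """Return how many days a caption pull takes if quota is the only limit."""
    downloads_per_day = daily_quota // unit_cost
    return math.ceil(videos / downloads_per_day)
```

Under these assumptions, `days_needed(5000)` works out to 100 days on a single default-quota project, which is why large pulls tend to look for another route.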

4. Expert Insight: The Summarizer Myth

I see people searching for a "YouTube video summarizer AI without subtitles". The premise is fundamentally flawed.

Any AI summarizer, including the best ones, is only as good as its input data. If your input is a raw, ASR-generated transcript, your summary will be riddled with errors and lack coherent structure. You must clean the data first. Our core value is providing the clean input that makes AI tools actually useful.

Critical View: Why "Garbage In, Garbage Out" Cripples AI Summarizers

When an AI summarizer is fed raw ASR transcripts, it cannot reliably distinguish meaningful content from noise. Non-speech tags like "[Music]", misrecognized technical terms ("API" becomes "a pie"), and run-on sentences are all treated as if they were part of what the speaker said. The resulting summary isn't just inaccurate; it's often nonsensical and actively misleading. The data preparation step isn't optional. It's the foundation of any reliable AI-driven analysis.
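One common way to repair misrecognized terms before summarization is a domain glossary. The sketch below is an illustration under stated assumptions: the `GLOSSARY` entries are hypothetical (only "a pie" comes from the example above), and `apply_glossary` is not a function from any particular library.

```python
import re
from typing import Dict

# Hypothetical glossary mapping a misheard phrase to the intended term.
GLOSSARY: Dict[str, str] = {
    "a pie": "API",
    "jason": "JSON",
}


def apply_glossary(text: str, glossary: Dict[str, str] = GLOSSARY) -> str:
    """Replace whole-phrase, case-insensitive matches with the intended term."""
    for wrong, right in glossary.items():
        pattern = re.compile(r"\b" + re.escape(wrong) + r"\b", re.IGNORECASE)
        text = pattern.sub(right, text)
    return text
```

A glossary like this has to be domain-specific: the same rule that fixes a programming tutorial would corrupt a cooking video that really does mention a pie, or a speaker actually named Jason.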

5. Conclusion & Call to Action

Data prep is the invisible 90% of any successful data project. Stop settling for messy output that costs you time and money. Our toolkit is designed by data professionals, for data professionals.