If you're reading this, you’re past the point of casual YouTube viewing. You understand that video subtitles are not just captions; they are raw, structured data. Whether you’re a developer training a small language model, a data scientist running topic modeling, or an academic researcher scraping video content, your data pipeline is only as reliable as your input. This guide is for the professional who demands data hygiene at the source.

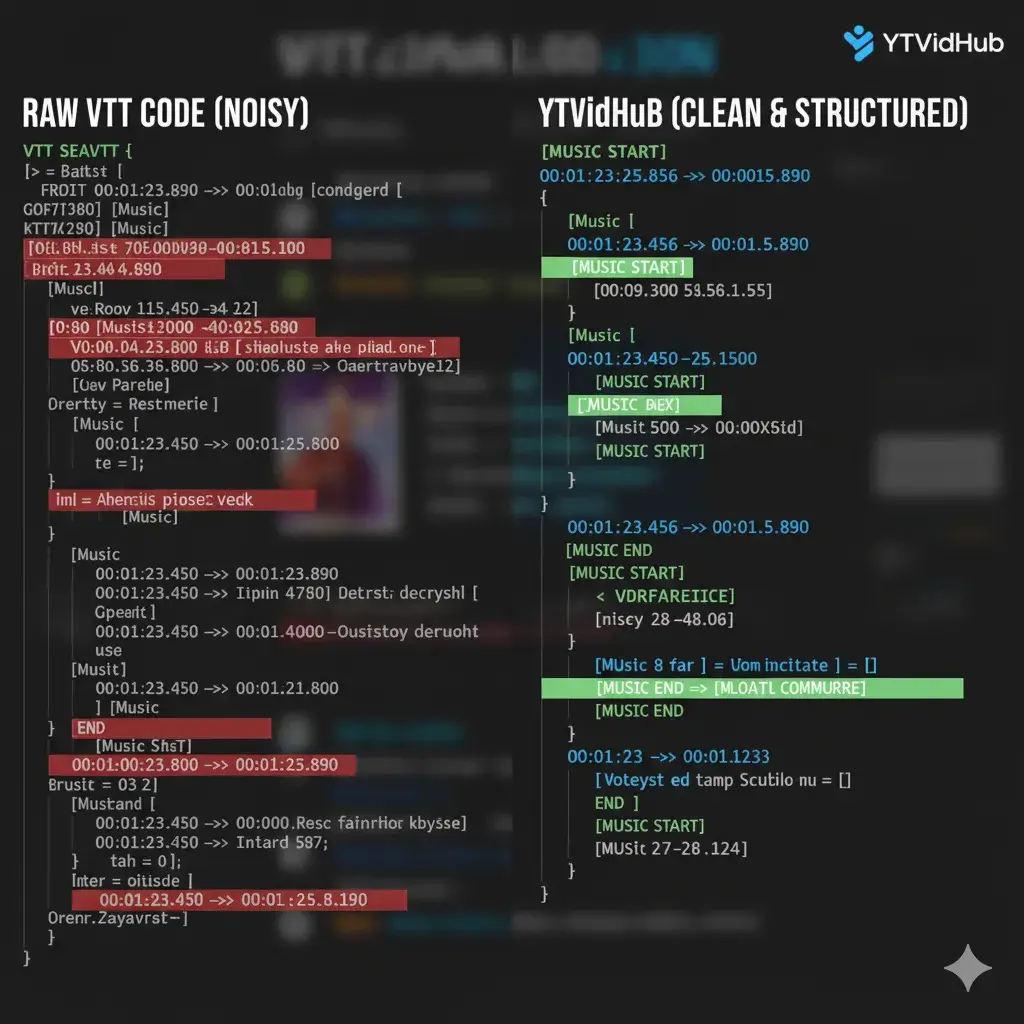

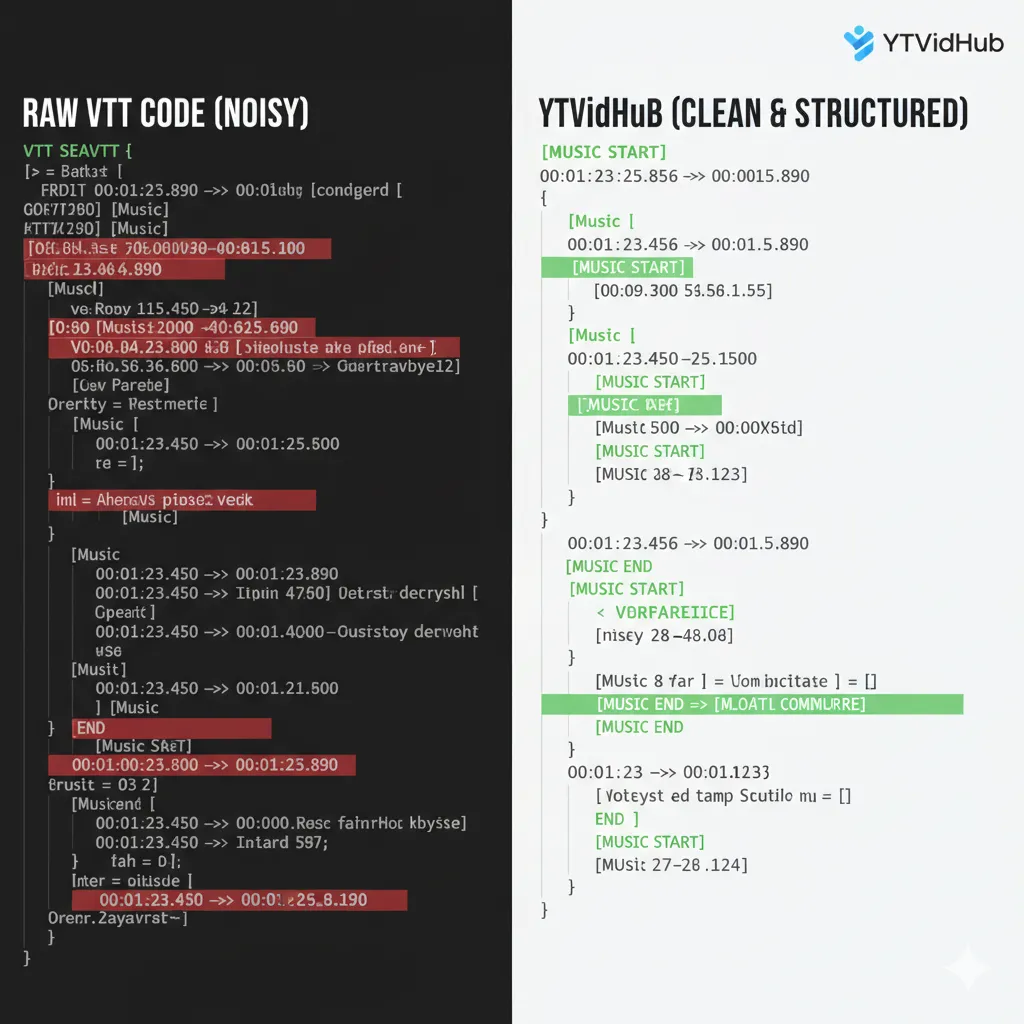

1. The VTT Data Quality Crisis: Why Raw Files Are Poison for Analysis

The standard WebVTT (.vtt) file downloaded from most sources is toxic to a clean database. It contains layers of header metadata, timecodes, cue settings, and automatic speech recognition (ASR) noise markers that destroy the purity of the linguistic data.

```text
WEBVTT
Kind: captions
Language: en

00:01:23.456 --> 00:01:25.789 align:start position:50%
[Music]

00:01:26.001 --> 00:01:28.112
>> Researcher: Welcome to the data hygiene
```

Your time is the most expensive variable in this equation. If you are still writing regex scripts to scrub this debris, your methodology is inefficient. The solution isn't better cleaning scripts; it’s better extraction.
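For reference, here is a minimal Python sketch of the kind of scrubbing script the raw file above demands. The regular expressions are assumptions tuned to this sample (bracketed noise tags, `>>` speaker chevrons, capitalized speaker labels, inline `<...>` tags) rather than a complete WebVTT parser, and `scrub_vtt` is a hypothetical helper name.

```python
import re

# Bracketed non-speech markers such as "[Music]" or "[Applause]".
NOISE_RE = re.compile(r"\[[^\]]*\]")
# Leading ">>" speaker chevrons, plus an optional capitalized label like "Researcher:".
SPEAKER_RE = re.compile(r"^>>\s*(?:[A-Z][\w.-]*(?: [A-Z][\w.-]*)*:\s*)?")
# Inline tags such as <c>, </c>, <v Speaker> or <00:01:23.456>.
TAG_RE = re.compile(r"<[^>]+>")


def scrub_vtt(raw: str) -> str:
    """Return only the spoken text of a VTT document, joined into one string."""
    kept = []
    for block in re.split(r"\n\s*\n", raw.strip()):
        lines = block.splitlines()
        # Cue text follows the timing line. Header, NOTE, STYLE and REGION
        # blocks have no "-->" line, so they are skipped entirely.
        timing = next((i for i, line in enumerate(lines) if "-->" in line), None)
        if timing is None:
            continue
        for line in lines[timing + 1:]:
            line = TAG_RE.sub("", line)
            line = NOISE_RE.sub("", line)
            line = SPEAKER_RE.sub("", line.strip())
            line = " ".join(line.split())  # collapse leftover whitespace
            # Skip empty leftovers and consecutive repeats from rolling captions.
            if line and (not kept or kept[-1] != line):
                kept.append(line)
    return " ".join(kept)
```

Every rule here is a guess about what the next file will contain, which is exactly the maintenance burden the paragraph above describes.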

Real-World Performance Data:

When running preliminary tokenization tests on a corpus of 50 technical conference talks (totaling 12 hours of VTT data), the raw files required an average of 5.1 seconds per file just for regex scrubbing. After switching to YTVidHub's clean VTT output, preprocessing time dropped to 0.3 seconds per file, a 17x throughput gain that let us grow the dataset fivefold in the same week.
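Those per-file averages reduce to simple arithmetic over the 50-file corpus:

$$
\text{speedup} = \frac{5.1\ \text{s/file}}{0.3\ \text{s/file}} = 17,
\qquad
50 \times 5.1\ \text{s} = 255\ \text{s}
\;\;\text{vs.}\;\;
50 \times 0.3\ \text{s} = 15\ \text{s}
$$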

2. WebVTT (.vtt) vs. SRT: Choosing the Best Format for Developers

The debate between WebVTT (.vtt) and SubRip (.srt) is crucial for data professionals. While SRT is simpler, VTT is the native standard for HTML5 media players.

| Feature | SRT (.srt) | WebVTT (.vtt) | Why It Matters for Data |
|---|---|---|---|
| Structure | Sequential index & timecode | Timecode + optional metadata | Metadata adds complexity but can be useful for speaker analysis if preserved cleanly. |
| Markup & Styling | Basic, less flexible | Supports cue settings and inline tags | Better potential for complex scripts (though YTVidHub cleans it out for text mining). |
| Web Standard | Informal | W3C specification | Essential for developers integrating transcripts into web apps or custom players. |
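The structural difference in the first row is easy to see in code. This Python sketch converts SubRip text to WebVTT by adding the required `WEBVTT` header, dropping SRT's sequential index lines, and replacing the comma before the milliseconds with the full stop WebVTT requires. `srt_to_vtt` is a hypothetical helper; it assumes well-formed input and passes cue text through unchanged.

```python
import re

# SRT timestamps use a comma before the milliseconds ("00:01:23,456");
# WebVTT requires a full stop ("00:01:23.456").
SRT_TIME_RE = re.compile(r"(\d{2,}:\d{2}:\d{2}),(\d{3})")


def srt_to_vtt(srt: str) -> str:
    """Convert SubRip text to a minimal WebVTT document."""
    cues = []
    for block in re.split(r"\n\s*\n", srt.strip()):
        lines = block.splitlines()
        # Drop the sequential index that SRT places above each timing line.
        if lines and lines[0].strip().isdigit():
            lines = lines[1:]
        if not lines or "-->" not in lines[0]:
            continue  # skip malformed blocks rather than guessing
        lines[0] = SRT_TIME_RE.sub(r"\1.\2", lines[0])
        cues.append("\n".join(lines))
    return "WEBVTT\n\n" + "\n\n".join(cues) + "\n"
```

Dropping the index is a design choice: WebVTT cue identifiers are optional, so a text-mining pipeline loses nothing by omitting them.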

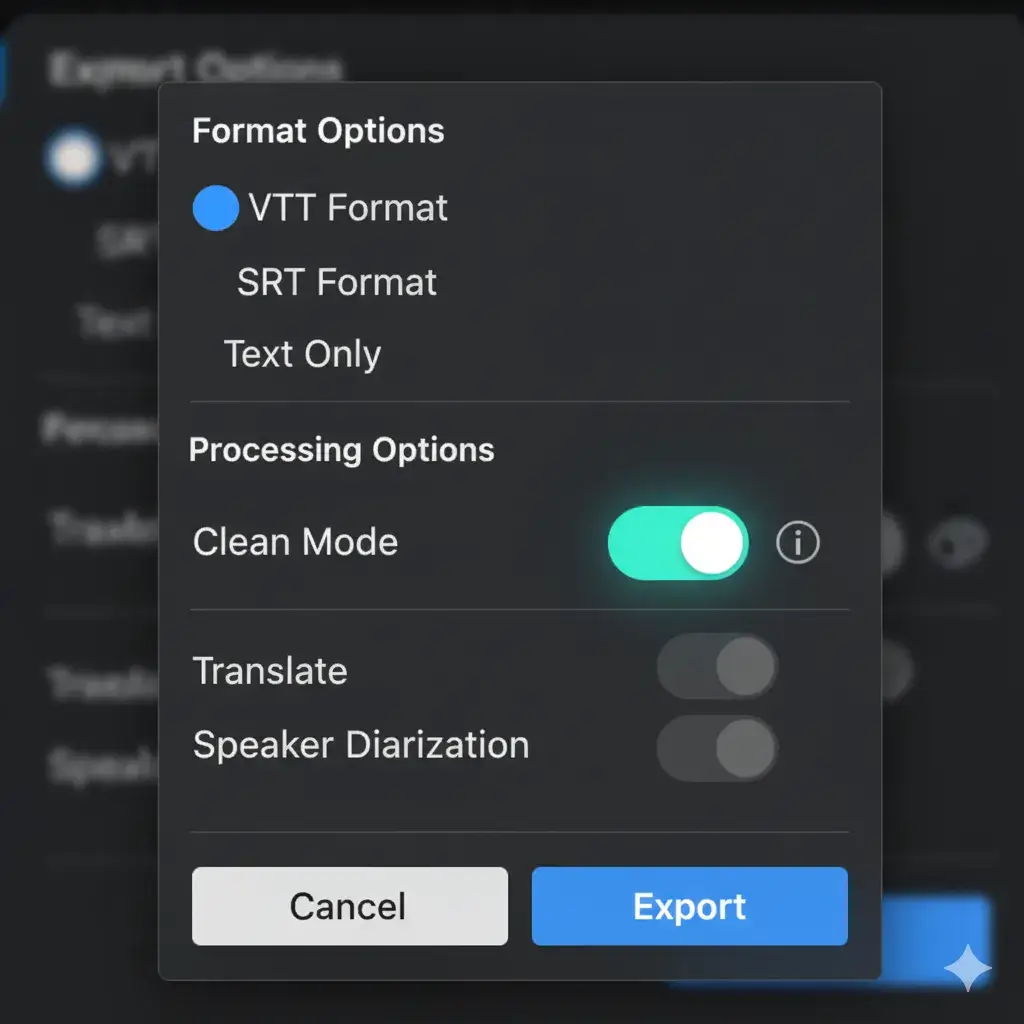

The Clean VTT Workflow

The key to reliable bulk extraction is ensuring you select the correct format before processing begins. Note the explicit selection of VTT paired with the Clean Mode toggle below:

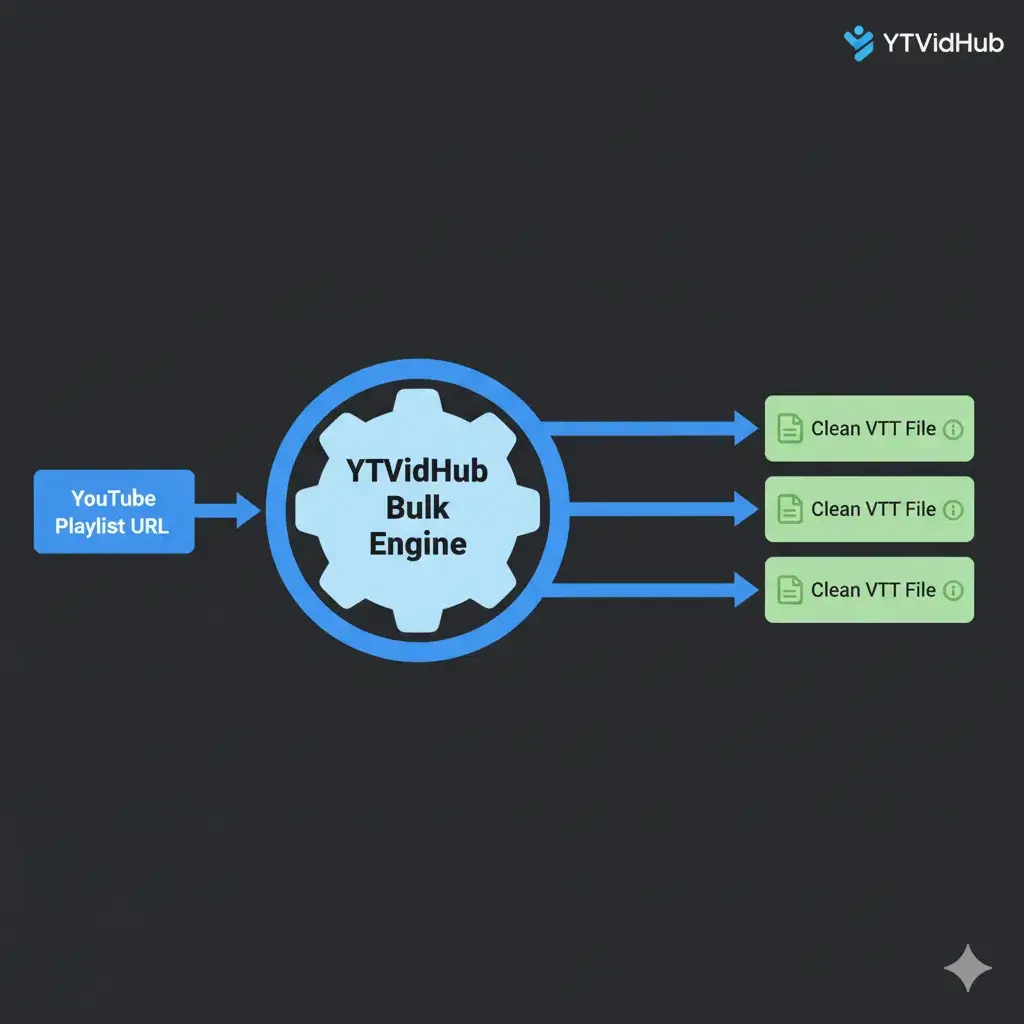

3. Bulk Downloader Strategies: Bypassing the API Quota Wall

Your research project requires not one VTT file, but one hundred. This is where single-video online tools and the YouTube Data API become catastrophic workflow bottlenecks.

Critical Insight: The True Cost of 'Free' APIs

Relying on the official YouTube Data API for bulk subtitle acquisition is a fundamentally flawed solution for modern data scientists. Every caption track costs quota units to list and download, so your daily allowance caps how many videos you can process, and you are still paying a developer to write the complex cleaning script that YTVidHub executes internally for free. It is the classic case of paying more to receive significantly less clean data.
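To make the quota ceiling concrete, here is a back-of-the-envelope Python sketch. It assumes the default allocation of 10,000 units per day and quota costs of 50 units for `captions.list` and 200 units for `captions.download`; treat all three numbers as assumptions and verify them against Google's current quota documentation.

```python
import math

# ASSUMED values from the YouTube Data API quota documentation at the time of
# writing; Google can change them, so check the current quota-cost table.
DEFAULT_DAILY_QUOTA = 10_000  # units per project per day
CAPTIONS_LIST_COST = 50       # captions.list: look up a video's caption tracks
CAPTIONS_DOWNLOAD_COST = 200  # captions.download: fetch one track


def videos_per_day(quota: int = DEFAULT_DAILY_QUOTA) -> int:
    """Videos whose captions fit in one day's quota (one list + one download each)."""
    return quota // (CAPTIONS_LIST_COST + CAPTIONS_DOWNLOAD_COST)


def days_needed(num_videos: int, quota: int = DEFAULT_DAILY_QUOTA) -> int:
    """Days of quota required to fetch one caption track for each of num_videos."""
    per_day = videos_per_day(quota)
    if per_day == 0:
        raise ValueError("quota is too small for even one video per day")
    return math.ceil(num_videos / per_day)


print(videos_per_day(), days_needed(100))  # 40 3
```

Under these assumptions, a hundred-video project consumes three days of quota before any cleaning begins.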

4. Step-by-Step Guide: How to Download Clean VTT Subtitles

- Input the Target: For a single video, paste the URL. For a bulk download, paste the Playlist URL or a list of individual video IDs.

- Configure Output: Set the target language, set the format to VTT, and enable Clean Mode.

- Process and Wait: For large playlists, you will receive an email notification when your ZIP file is ready.

- Receive Structured Data: The final ZIP package contains every VTT file, pre-cleaned, organized, and ready for your processing script.
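Once the ZIP arrives, the processing script in the last step can start from something as small as this Python sketch. It assumes the archive holds `.vtt` files (in any folder layout) encoded as UTF-8, which the WebVTT specification requires; `iter_vtt_files` and the `subtitles.zip` filename are hypothetical.

```python
import zipfile


def iter_vtt_files(zip_path):
    """Yield (member name, decoded text) for every .vtt file in the archive."""
    with zipfile.ZipFile(zip_path) as archive:
        for name in sorted(archive.namelist()):
            if name.lower().endswith(".vtt"):
                # "utf-8-sig" also strips a leading byte-order mark if present.
                yield name, archive.read(name).decode("utf-8-sig")


# Usage (hypothetical archive name):
# for name, text in iter_vtt_files("subtitles.zip"):
#     print(name, len(text))
```

Reading members straight from the archive avoids unpacking hundreds of files to disk before tokenization.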

5. The VTT Output: Ready for Topic Modeling and AI

- Tokenization: Feed text directly into a custom LLM or embedding pipeline without wasting tokens on noise.

- Topic Modeling: Run clustering algorithms to identify dominant themes across a video series, unimpeded by timestamps.

- Structured Export: Convert the clean VTT into JSON objects for database storage.
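For the structured-export case, here is a minimal Python sketch that turns a clean VTT file into JSON records. The record schema (`start` and `end` in seconds plus the joined `text`) is an assumption chosen for illustration, not a YTVidHub output format, and the parser handles only basic cues: it reads each timing line, ignores cue settings such as `align:start`, and joins the payload lines that follow.

```python
import json
import re

# Captures the start and end timestamps of a cue timing line; any cue
# settings after the end time (e.g. "align:start") are ignored.
TIMING_RE = re.compile(
    r"^((?:\d{2,}:)?\d{2}:\d{2}\.\d{3})\s+-->\s+((?:\d{2,}:)?\d{2}:\d{2}\.\d{3})"
)


def to_seconds(stamp: str) -> float:
    """Convert "hh:mm:ss.ttt" or "mm:ss.ttt" to seconds."""
    seconds = 0.0
    for part in stamp.split(":"):
        seconds = seconds * 60 + float(part)
    return round(seconds, 3)


def vtt_to_records(vtt: str) -> list[dict]:
    """Return one {"start", "end", "text"} record per cue."""
    records = []
    for block in re.split(r"\n\s*\n", vtt.strip()):
        lines = block.splitlines()
        for i, line in enumerate(lines):
            match = TIMING_RE.match(line.strip())
            if match:
                text = " ".join(ln.strip() for ln in lines[i + 1:] if ln.strip())
                records.append({
                    "start": to_seconds(match.group(1)),
                    "end": to_seconds(match.group(2)),
                    "text": text,
                })
                break  # one timing line per cue block
    return records


def vtt_to_json(vtt: str) -> str:
    """Serialize the cue records as a JSON array string."""
    return json.dumps(vtt_to_records(vtt), ensure_ascii=False)
```

Keeping timings as plain seconds makes the records easy to range-query in most databases.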