When we introduced the concept of a dedicated Bulk YouTube Subtitle Downloader, the response was immediate. Researchers, data analysts, and AI builders confirmed a universal pain point: gathering transcripts for large projects is a "massive time sink." This is the story of how community feedback and tough engineering choices shaped YTVidHub.

1. The Bulk Challenge: Scalability Meets Stability

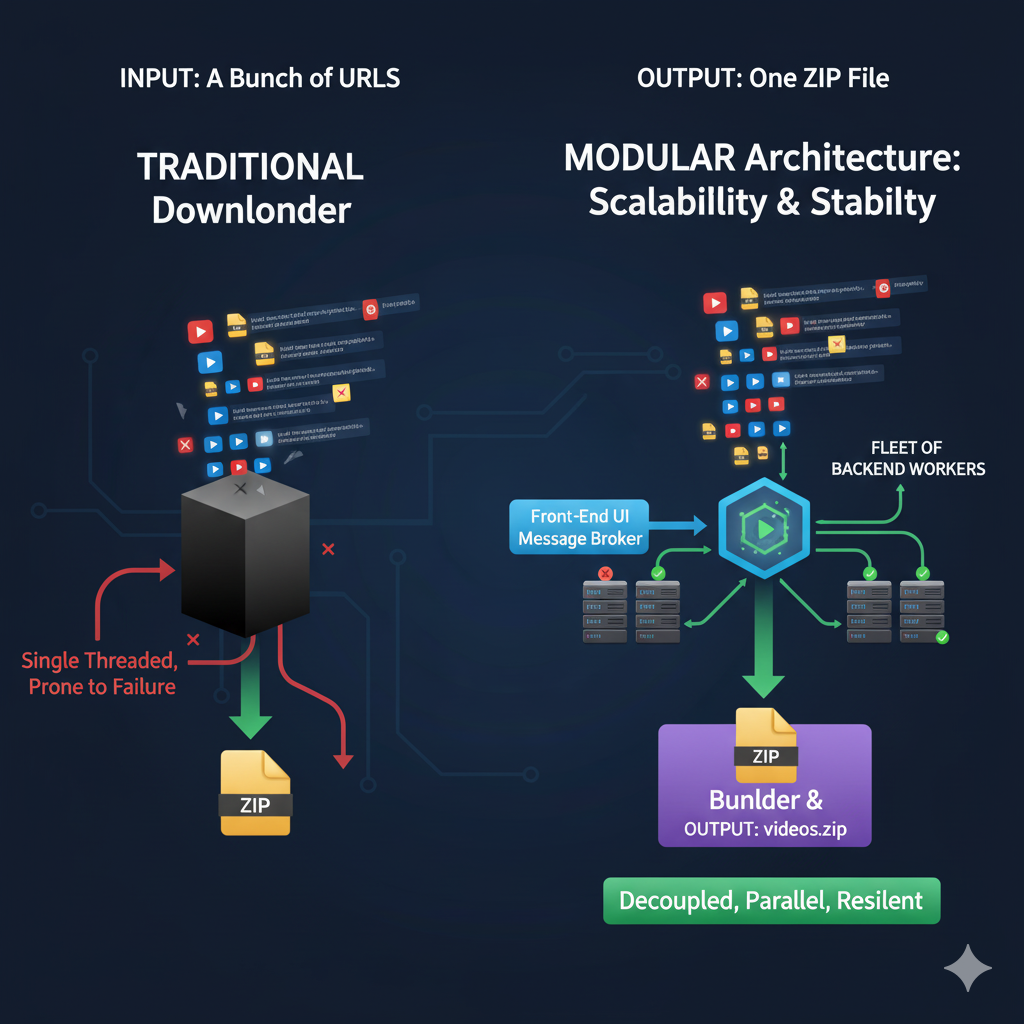

The primary hurdle for a true bulk downloader isn't downloading a single file; it's reliably processing hundreds or thousands of videos in parallel, without one failure taking down the rest. We needed an architecture that was both robust and scalable.

Our solution involves a decoupled, asynchronous job queue. When you submit a list, our front-end doesn't do the heavy lifting. Instead, it sends the list of video IDs to a message broker. A fleet of backend workers then picks up these jobs independently and processes them in parallel. This ensures that even if one video fails, it doesn't crash the entire batch.
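
To make the pattern concrete, here is a minimal sketch of a queue with independent workers. It is not our production code: an in-process `asyncio.Queue` stands in for the message broker, and `fetch_subtitles`, `process_batch`, and `run_batch` are hypothetical names chosen for illustration. The point it shows is failure isolation, where each job records its own outcome instead of aborting the batch.

```python
import asyncio


async def fetch_subtitles(video_id: str) -> str:
    """Hypothetical stand-in for the real download step."""
    await asyncio.sleep(0)  # simulate a non-blocking network call
    if video_id.startswith("bad"):
        raise RuntimeError(f"no captions for {video_id}")
    return f"transcript of {video_id}"


async def worker(queue: asyncio.Queue, results: dict) -> None:
    """Pull video IDs off the queue until cancelled."""
    while True:
        video_id = await queue.get()
        try:
            results[video_id] = ("ok", await fetch_subtitles(video_id))
        except Exception as exc:
            # A failed video is recorded, not re-raised, so the batch continues.
            results[video_id] = ("failed", str(exc))
        finally:
            queue.task_done()


async def process_batch(video_ids, num_workers: int = 4) -> dict:
    queue: asyncio.Queue = asyncio.Queue()
    results: dict = {}
    for video_id in video_ids:
        queue.put_nowait(video_id)

    workers = [
        asyncio.create_task(worker(queue, results)) for _ in range(num_workers)
    ]
    await queue.join()  # wait until every queued job has been handled
    for task in workers:
        task.cancel()  # workers loop forever, so stop them explicitly
    await asyncio.gather(*workers, return_exceptions=True)
    return results


def run_batch(video_ids, num_workers: int = 4) -> dict:
    """Synchronous entry point for the sketch."""
    return asyncio.run(process_batch(video_ids, num_workers))
```

Calling `run_batch(["abc123", "bad_video", "xyz789"])` returns a dictionary with one entry per video ID, where the failing ID is marked `"failed"` and the other two are marked `"ok"`. A real deployment would swap the in-memory queue for a durable broker so that jobs survive a worker restart.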

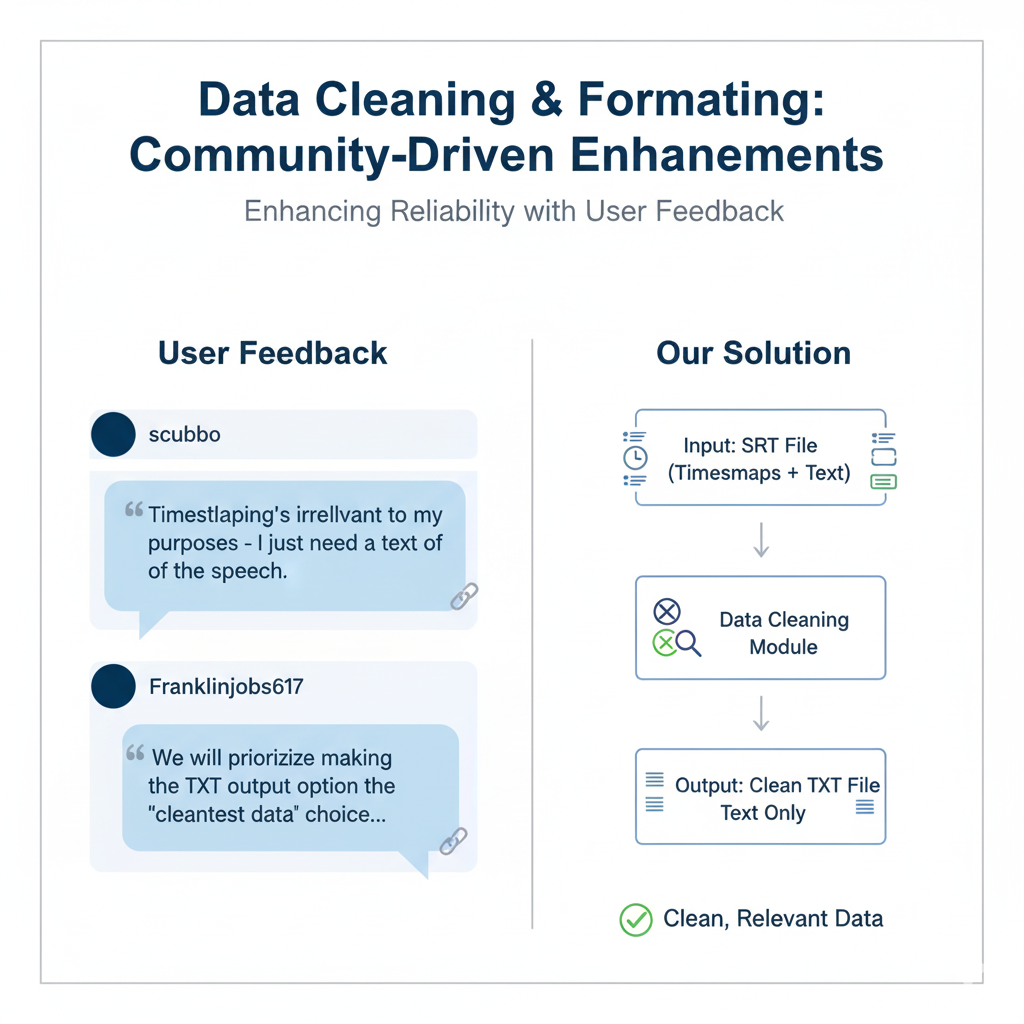

2. The Data Problem: More Than Just SRT

For most analysts, raw SRT files—with timestamps and sequence numbers—are actually "dirty data." They require an extra, tedious pre-processing step before they can be used in analysis tools or Retrieval-Augmented Generation (RAG) systems.

This direct feedback was a turning point. We made a crucial decision: to treat the **TXT output as a first-class citizen**. Our system doesn't just convert SRT to TXT; it runs a dedicated cleaning pipeline to strip all timestamps, metadata, empty lines, and formatting tags. The result is a pristine, analysis-ready block of text, saving our users a critical step in their workflow.
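
As an illustration of what such a cleaning step involves, here is a minimal sketch in Python. It is an assumption about one reasonable approach, not the pipeline we run: the function name `srt_to_text` and both regular expressions are hypothetical, and the tag pattern is deliberately simple, so it would also strip literal text that happens to sit between angle brackets.

```python
import re

# Matches an SRT timing line such as "00:00:01,000 --> 00:00:03,500".
TIMESTAMP_RE = re.compile(
    r"^\d{1,2}:\d{2}:\d{2}[,.]\d{3}\s*-->\s*\d{1,2}:\d{2}:\d{2}[,.]\d{3}"
)
# Matches HTML-like tags (<i>, <font ...>) and brace overrides such as {\an8}.
TAG_RE = re.compile(r"</?[^>]+>|\{\\[^}]*\}")


def srt_to_text(srt: str) -> str:
    """Reduce SRT content to a single block of plain caption text."""
    raw_lines = [line.strip() for line in srt.lstrip("\ufeff").splitlines()]
    kept = []
    for i, line in enumerate(raw_lines):
        if not line:
            continue  # drop empty lines
        if TIMESTAMP_RE.match(line):
            continue  # drop timing lines
        next_line = raw_lines[i + 1] if i + 1 < len(raw_lines) else ""
        # A digit-only line is a sequence number only when a timing line
        # follows it; otherwise it is caption text (e.g. a spoken "42").
        if line.isdigit() and TIMESTAMP_RE.match(next_line):
            continue
        cleaned = TAG_RE.sub("", line).strip()
        if cleaned:
            kept.append(cleaned)
    return " ".join(kept)
```

The look-ahead on digit-only lines is the one design choice worth noting: a naive "drop every numeric line" rule would silently delete captions that consist of a number.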

3. The Accuracy Dilemma: A Two-Pronged Strategy

The most insightful feedback centered on data quality. While YouTube's auto-generated (ASR) captions are a fantastic baseline, they often fall short of the accuracy provided by high-end AI models. This presents a classic "Accuracy vs. Cost" dilemma.

Phase 1: The Best Free Baseline (Live Now)

Our core service provides unlimited bulk downloading of all official YouTube-provided subtitles (both manual and ASR) for Pro members. This establishes the **best possible baseline data**, accessible at an unmatched scale and speed.

Phase 2: The Pro Transcription Engine (In Development)

For projects where accuracy is non-negotiable, a more powerful solution is needed. Our upcoming **Pro Transcription** tier is being built to solve this:

- State-of-the-Art Models: We're integrating models like OpenAI's Whisper and Google's Gemini, with the goal of transcription that approaches human-level accuracy.

- Contextual Awareness: You'll be able to provide a list of keywords, acronyms, and proper nouns (e.g., "GANs", "BERT", "PyTorch"). Our system will feed this context to the model, dramatically improving accuracy for specialized or technical content.

- Intelligent Pre-processing: Using tools like `ffmpeg`, we'll automatically detect and remove silent segments from the audio before transcription. This reduces processing time, lowers costs, and can even improve the model's focus on relevant speech.
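
Since this tier is still in development, the following is only a sketch of how two of these steps could look, under stated assumptions. The helper names, the "Glossary:" prompt format, and the default threshold values are hypothetical. The `silenceremove` audio filter and its `stop_periods`, `stop_duration`, and `stop_threshold` options are part of `ffmpeg`; the sketch only builds the command and does not run it.

```python
def build_silence_trim_command(
    src: str,
    dst: str,
    threshold_db: float = -40.0,   # assumed default: quieter than this is "silence"
    min_silence_s: float = 1.0,    # assumed default: only cut gaps at least this long
) -> list:
    """Build an ffmpeg argument list that strips silent stretches from audio."""
    audio_filter = (
        "silenceremove="
        # A negative stop_periods removes silence throughout the file,
        # not just at the end.
        f"stop_periods=-1:stop_duration={min_silence_s}:"
        f"stop_threshold={threshold_db}dB"
    )
    return ["ffmpeg", "-y", "-i", src, "-af", audio_filter, dst]


def build_context_prompt(terms) -> str:
    """Turn user-supplied terms into a short hint string for the model."""
    # dict.fromkeys removes duplicates while preserving the original order.
    unique = list(dict.fromkeys(t.strip() for t in terms if t.strip()))
    if not unique:
        return ""
    return "Glossary: " + ", ".join(unique)
```

The command list can be passed to `subprocess.run(cmd, check=True)` on a machine where `ffmpeg` is installed. For the prompt, one possible route is the open-source `whisper` package, whose `transcribe` method accepts an `initial_prompt` string; whether that is the mechanism the final product uses is not settled here.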

Ready to Automate Your Research?

The unlimited bulk downloader and clean TXT output are live now. Stop the manual work and start saving hours today.