Clean Subtitles, Flawless Data

Data quality is non-negotiable for LLM training and academic research. Learn the professional method to remove timestamps and ASR noise for truly pristine text data.

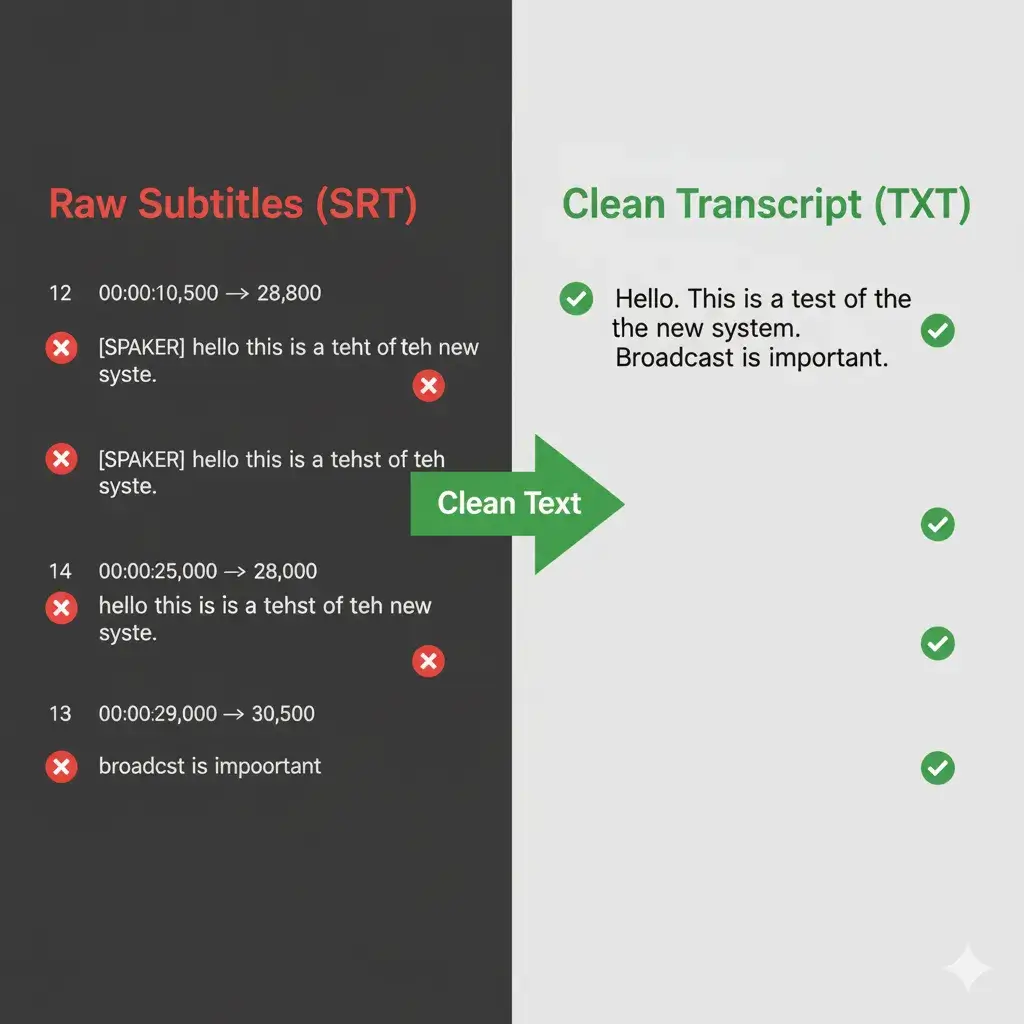

The Pitfall of Raw SRT/VTT Files

Raw subtitle files are poison for high-stakes projects. Feeding noisy data into your LLM or research model doesn't just lower quality; it can actively compromise your results.

How Raw Data Corrupts Your Work:

- Timestamps & Tags: Useless structural data that confuses models and requires tedious pre-processing.
- ASR Noise: Filler words like "um," "uh," and non-speech tags like `[Applause]` pollute semantic meaning.
- Inconsistent Formatting: Different videos produce varied outputs, creating a data-wrangling nightmare.

How Our "Clean Mode" Works

Our engine goes beyond simple find-and-replace. It uses a multi-stage parser to surgically remove noise and deliver pure, high-quality text.

1. Structural Tag Elimination

Precisely strips away all SRT/VTT timecodes, sequence numbers, and formatting tags without affecting the core text.
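
To make this stage concrete, here is a minimal Python sketch of structural stripping. It is an illustration under stated assumptions, not the engine's actual parser: the name `strip_structure` and both regular expressions are hypothetical, and the sketch does not handle WebVTT `NOTE` or `STYLE` blocks.

```python
import re

# Cue timing lines, e.g. SRT "00:00:01,000 --> 00:00:04,000"
# or VTT "00:01.000 --> 00:04.000 align:start".
TIMING = re.compile(
    r"^\s*(?:\d+:)?\d{2}:\d{2}[.,]\d{3}\s*-->\s*(?:\d+:)?\d{2}:\d{2}[.,]\d{3}.*$"
)
# Inline markup such as <i>, </b>, or VTT inline timestamps like <00:00:01.500>.
INLINE_TAG = re.compile(r"<[^>]+>")


def strip_structure(raw: str) -> list[str]:
    """Return only the text lines from SRT or VTT content (hypothetical helper)."""
    kept = []
    lines = raw.splitlines()
    for i, line in enumerate(lines):
        text = line.strip()
        # Drop blank lines, the WEBVTT header, and cue timing lines.
        if not text or text.startswith("WEBVTT") or TIMING.match(text):
            continue
        # Treat a bare number as a sequence index only when a timing line follows,
        # so numbers that are part of the spoken text survive.
        if text.isdigit() and i + 1 < len(lines) and TIMING.match(lines[i + 1]):
            continue
        text = INLINE_TAG.sub("", text).strip()
        if text:
            kept.append(text)
    return kept
```

Checking the line after a bare number is a deliberate choice: it is what keeps a caption that happens to be just "3" from being mistaken for a sequence number.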

2. ASR Noise Filtering

Identifies and removes common Automatic Speech Recognition artifacts like `[Music]` or `[Applause]` tags.
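
A rough sketch of what this kind of filtering could look like in Python follows. The function `remove_noise`, its `drop_fillers` flag, and the patterns are assumptions made for illustration. Filler-word removal is included only as an optional step, since it is more aggressive than dropping bracketed tags.

```python
import re

# Square-bracketed non-speech tags such as [Music] or [Applause].
NON_SPEECH_TAG = re.compile(r"\[[^\[\]]*\]")
# Standalone filler words ("um", "uh", "umm", ...) plus a trailing comma and spaces.
FILLER = re.compile(r"\b(?:um+|uh+)\b,?\s*", re.IGNORECASE)


def remove_noise(line: str, drop_fillers: bool = True) -> str:
    """Remove bracketed tags and, optionally, filler words (hypothetical helper)."""
    line = NON_SPEECH_TAG.sub(" ", line)
    if drop_fillers:
        line = FILLER.sub("", line)
    # Collapse the whitespace left behind by the removals.
    return re.sub(r"\s+", " ", line).strip()
```

The word boundaries in `FILLER` mean that words which merely contain "um" or "uh", such as "umbrella", are left alone.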

3. Format Unification

Consolidates all text fragments into a single, continuous paragraph, ready for any text processor or model.
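
The consolidation step can be sketched in a few lines of Python. This is an assumption-laden illustration, not the product's implementation: `unify` is a hypothetical name, and skipping consecutive duplicate fragments is an extra choice made here because auto-generated captions often repeat a line across neighbouring cues.

```python
def unify(fragments: list[str]) -> str:
    """Join cleaned caption fragments into one paragraph (hypothetical helper)."""
    merged: list[str] = []
    for fragment in fragments:
        # Normalize internal whitespace so fragments compare and join cleanly.
        text = " ".join(fragment.split())
        if not text:
            continue
        # Skip a fragment that exactly repeats the previous one.
        if merged and merged[-1] == text:
            continue
        merged.append(text)
    return " ".join(merged)
```

For example, `unify(["Hello  there", "", "Hello there", "general   Kenobi"])` yields a single line with the blank and repeated fragments dropped.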

Ready for a Professional Workflow?

Cleaning individual files is just one part of the puzzle. Our complete guide covers bulk downloading, advanced formatting, and everything you need to build a robust data pipeline.