Introduction: The Hidden Cost of Dirty Data

Welcome to the definitive guide to preparing YouTube transcripts for machine learning. If you are scaling LLM or NLP projects with real-world conversational data from YouTube, you already know the hidden cost isn't just time: it's the quality of your training set.

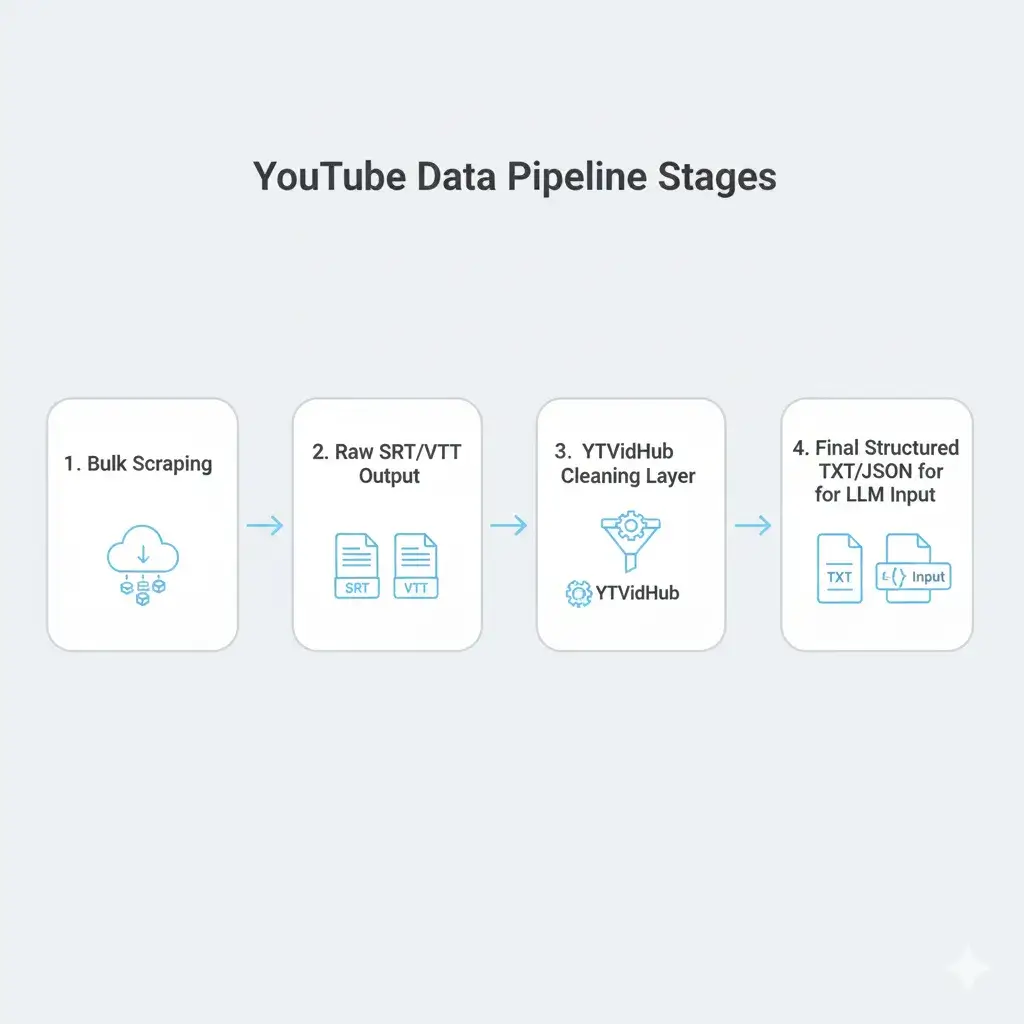

We built YTVidHub because generic subtitle downloaders fail at the critical second step: data cleaning. This guide breaks down exactly how to clean raw transcript data until it is ready for production use.

The Technical Challenge: Why Raw SRT Files Slow Down Your Pipeline

Many tools offer bulk downloads, but they often deliver messy output. For machine learning, this noise can be catastrophic, leading to poor model performance and wasted compute cycles.

The Scourge of ASR Noise

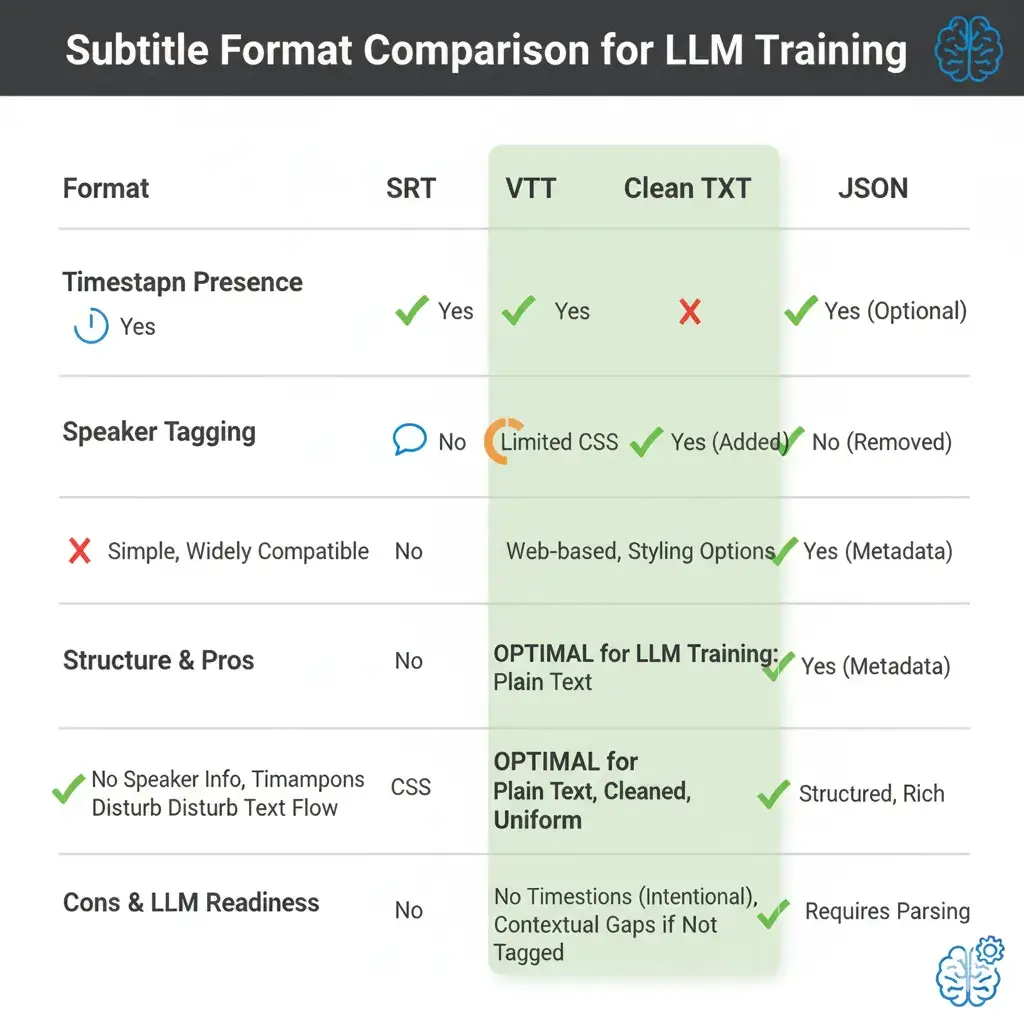

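- Timestamp Overload: Raw SRT/VTT files are riddled with cue numbers and time codes. These carry no spoken content, yet the tokenizer still converts them into tokens, diluting the text signal and inflating context windows unnecessarily.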

- Speaker Label Interference: Automatically inserted tags, such as speaker labels (`[SPEAKER_01]`) and non-speech markers (`[MUSIC]`), must be removed or converted into consistent speaker tags to avoid polluting your dataset.

- Accuracy Discrepancies: Automatically generated (ASR) subtitles contain transcription errors, so a robust verification or cleaning layer is needed to ensure data integrity.
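As a minimal sketch of such a cleaning layer, the Python below strips cue indices, timing lines, bracketed annotations such as `[MUSIC]` and `[SPEAKER_01]`, and inline markup like `<i>` from an SRT file. The function name `clean_srt` and its regular expressions are illustrative assumptions, not YTVidHub's implementation. It does not correct ASR misrecognitions, it deletes speaker labels rather than converting them (map `[SPEAKER_01]`-style tags to your own format instead if you need speaker turns), and VTT header lines other than `WEBVTT` (such as `Kind:` or `Language:`) are not handled.

```python
import re

# Timing line such as "00:00:01,000 --> 00:00:03,500" (SRT) or
# "00:01.000 --> 00:03.500" (VTT, where the hour field is optional).
TIMING_RE = re.compile(
    r"^(?:\d{1,2}:)?\d{2}:\d{2}[.,]\d{3}\s*-->\s*(?:\d{1,2}:)?\d{2}:\d{2}[.,]\d{3}"
)
# Bracketed annotations such as [MUSIC], [Applause] or [SPEAKER_01].
TAG_RE = re.compile(r"\[[^\]]*\]")
# Inline markup such as <i>...</i> or <font color="...">.
MARKUP_RE = re.compile(r"<[^>]+>")


def clean_srt(raw: str) -> list[str]:
    """Return only the spoken-text lines of a subtitle file, in order."""
    cleaned = []
    for line in raw.splitlines():
        stripped = line.strip()
        # Skip blank separators, numeric cue indices and the VTT header.
        # Note: a spoken line consisting only of digits is also dropped.
        if not stripped or stripped.isdigit() or stripped.startswith("WEBVTT"):
            continue
        if TIMING_RE.match(stripped):
            continue
        text = MARKUP_RE.sub("", TAG_RE.sub("", stripped))
        text = " ".join(text.split())  # collapse leftover whitespace
        if text:
            cleaned.append(text)
    return cleaned


if __name__ == "__main__":
    sample = """1
00:00:01,000 --> 00:00:03,500
[MUSIC]

2
00:00:03,500 --> 00:00:06,000
[SPEAKER_01] Welcome back to the channel.

3
00:00:06,000 --> 00:00:08,000
Today we talk about <i>tokenizers</i>.
"""
    print(clean_srt(sample))
    # ['Welcome back to the channel.', 'Today we talk about tokenizers.']
```

Regular expressions keep the sketch dependency-free; a dedicated subtitle parser is a sturdier choice once you need to handle styling blocks or malformed files.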

Solution & Format Depth: From Bulk Download to Structured Data

The key to efficiency is integrating the download and cleaning steps into a single pipeline. This is where a dedicated tool has the edge over a complex web of custom scripts, which often become brittle and hard to maintain at scale.

💡 Pro Tip from YTVidHub:

For most modern LLM fine-tuning, a clean, sequential TXT file (like our Research-Ready TXT) is superior to timestamped files. Focus on data density and semantic purity, not metadata overhead.
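To turn caption lines into a sequential text file of this kind, the lines can be joined into one continuous passage. The sketch below assumes its input is a list of caption lines with timing data already removed. `to_sequential_text` and `write_txt` are hypothetical helpers, not the Research-Ready TXT exporter. Dropping a line identical to the previous one assumes the repeat comes from rolling auto-captions, where one line is shown across adjacent cues, rather than from the speaker.

```python
from pathlib import Path


def to_sequential_text(lines: list[str]) -> str:
    """Join caption lines into one continuous, timestamp-free passage.

    A line identical to the one before it is dropped, on the assumption that
    it is a rolling-caption repeat rather than something the speaker said twice.
    """
    merged: list[str] = []
    for line in lines:
        line = line.strip()
        if not line or (merged and merged[-1] == line):
            continue
        merged.append(line)
    return " ".join(merged)


def write_txt(lines: list[str], path: str) -> None:
    """Write the sequential text as a UTF-8 file ending in a newline."""
    Path(path).write_text(to_sequential_text(lines) + "\n", encoding="utf-8")


if __name__ == "__main__":
    cues = ["so today we", "so today we", "talk about data", "cleaning"]
    print(to_sequential_text(cues))
    # so today we talk about data cleaning
```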

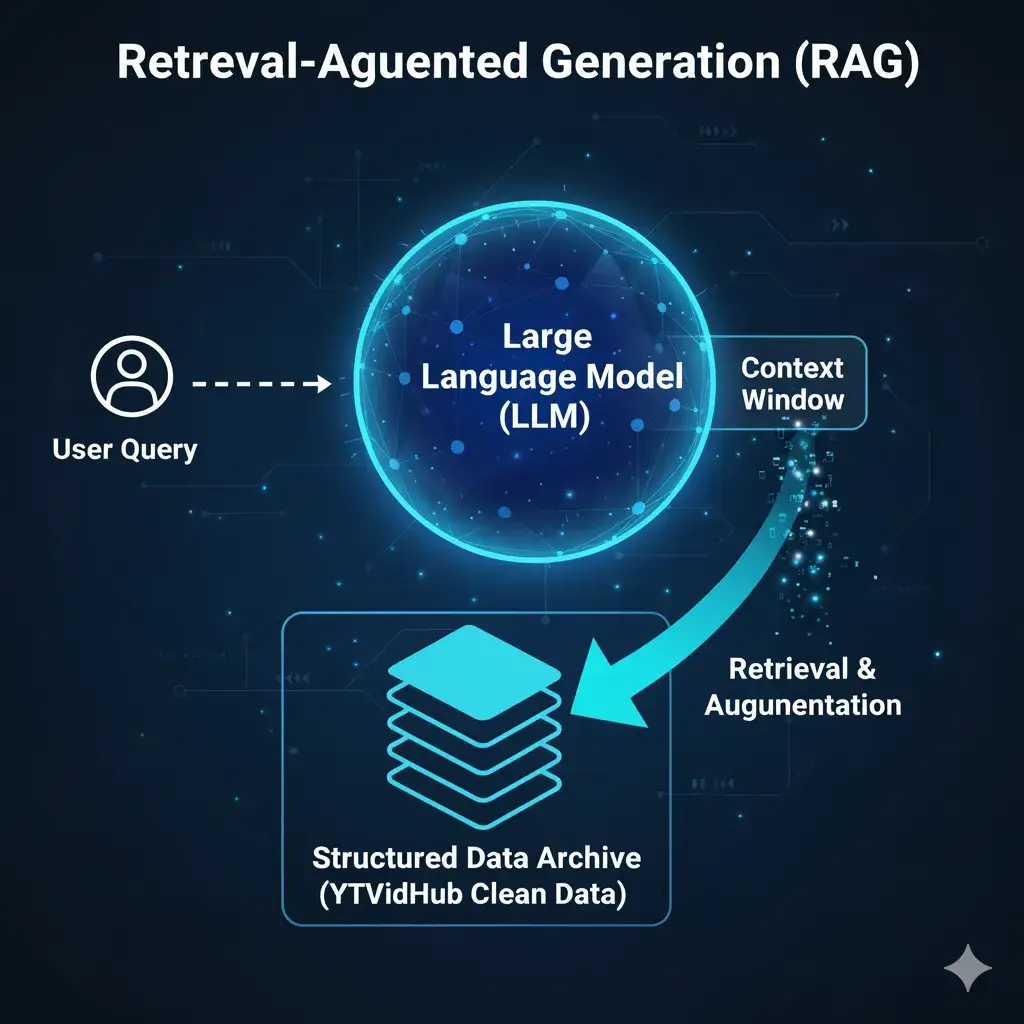

Use Case: Real-World Application for RAG Systems

One of the most powerful applications of clean, bulk transcript data is building robust Retrieval-Augmented Generation (RAG) systems. By splitting a large corpus of topical conversations into chunks, embedding them, and storing the embeddings in a vector database, you can retrieve the most relevant passages at query time and give your LLM the context it needs to answer user queries accurately.
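The chunk-and-retrieve steps can be sketched in plain Python. Real systems embed each chunk with an embedding model and store the vectors in a vector database. Here, as a stated simplification, bag-of-words cosine similarity stands in for the embedding model and a Python list stands in for the database. `chunk_words`, `retrieve`, and the default chunk size and overlap are illustrative assumptions, not tuned values.

```python
import math
from collections import Counter


def chunk_words(text: str, chunk_size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into windows of `chunk_size` words.

    Adjacent windows share `overlap` words, so text near a boundary
    appears in both neighbouring chunks.
    """
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reached the end of the text
    return chunks


def _vector(text: str) -> Counter:
    # Stand-in for an embedding model: lower-cased word counts.
    return Counter(text.lower().split())


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], k: int = 3) -> list[str]:
    """Return the k chunks most similar to the query (a list stands in for the vector DB)."""
    q = _vector(query)
    return sorted(chunks, key=lambda c: _cosine(q, _vector(c)), reverse=True)[:k]


if __name__ == "__main__":
    transcript = (
        "vector databases store embeddings of transcript chunks "
        "tokenizers split raw text into tokens "
        "retrieval finds the chunks closest to a user query"
    )
    chunks = chunk_words(transcript, chunk_size=6, overlap=2)
    print(retrieve("how do vector databases store chunks", chunks, k=1))
```

Replacing `_vector` with calls to a real embedding model, and the list with a vector store, keeps the same shape: chunk, embed, store, then rank by similarity at query time.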

Ready to build your own RAG system?

Start by gathering high-quality data with a tool built for the job.

Use the Bulk Subtitle Downloader Now